How to Create a Cloud Dataflow Pipeline Using Java and Apache Maven

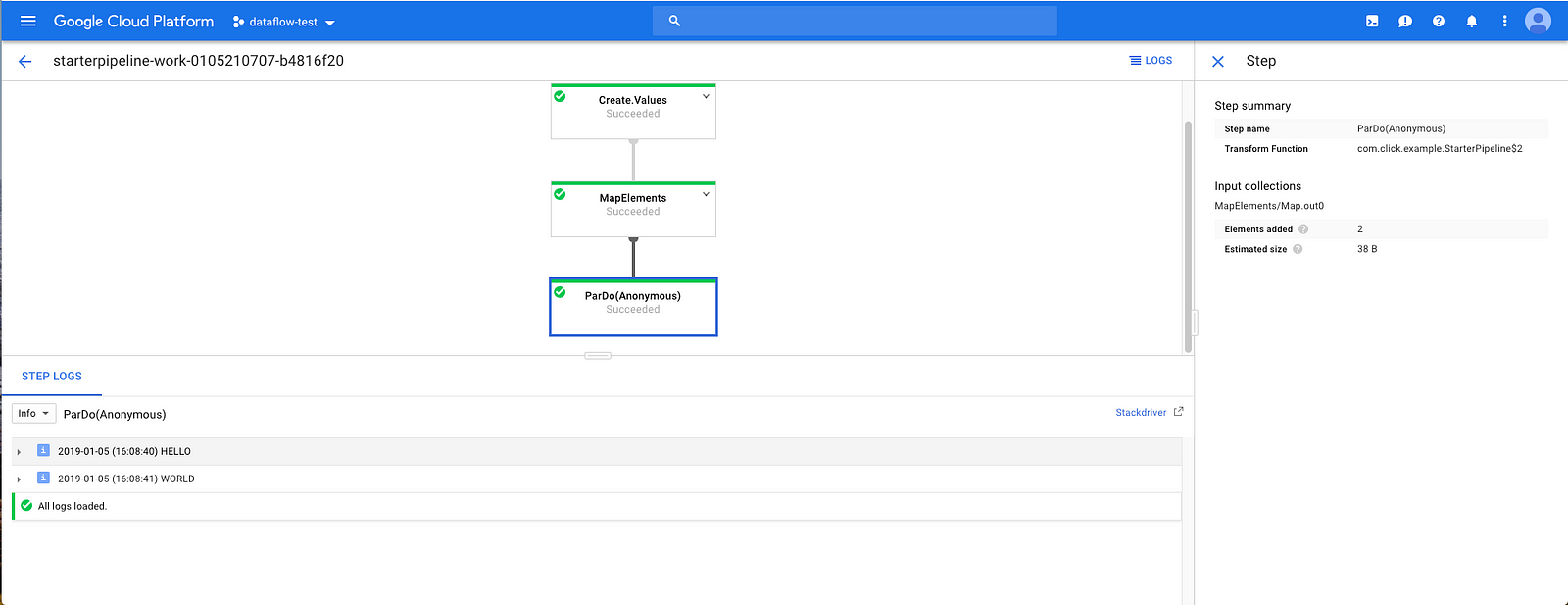

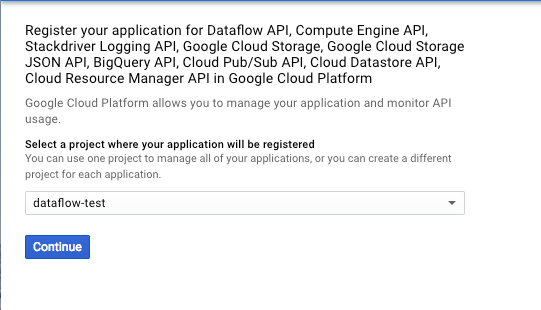

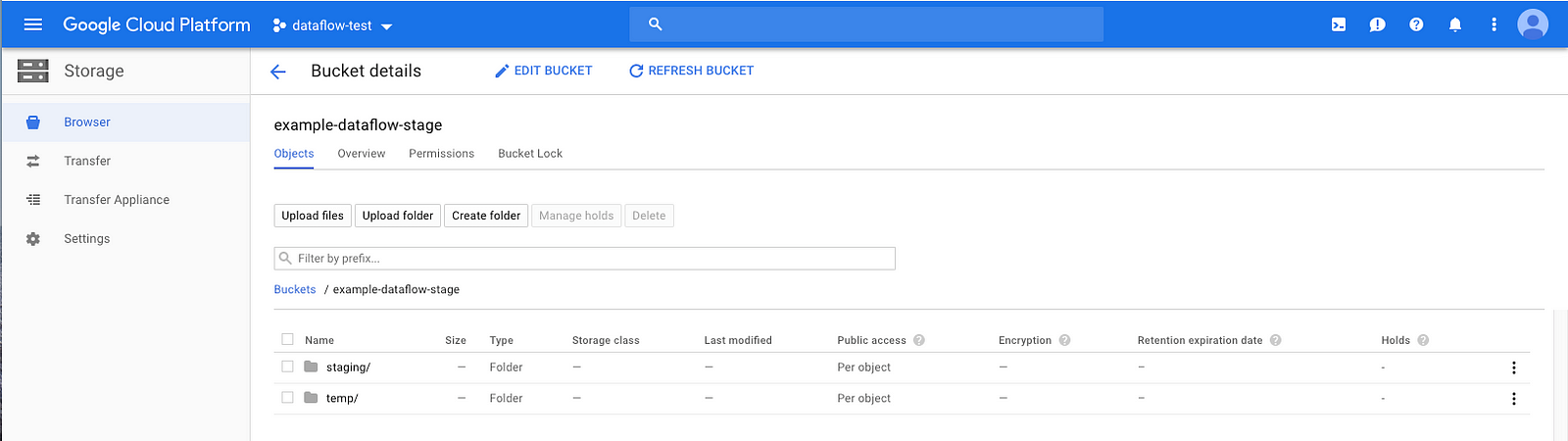

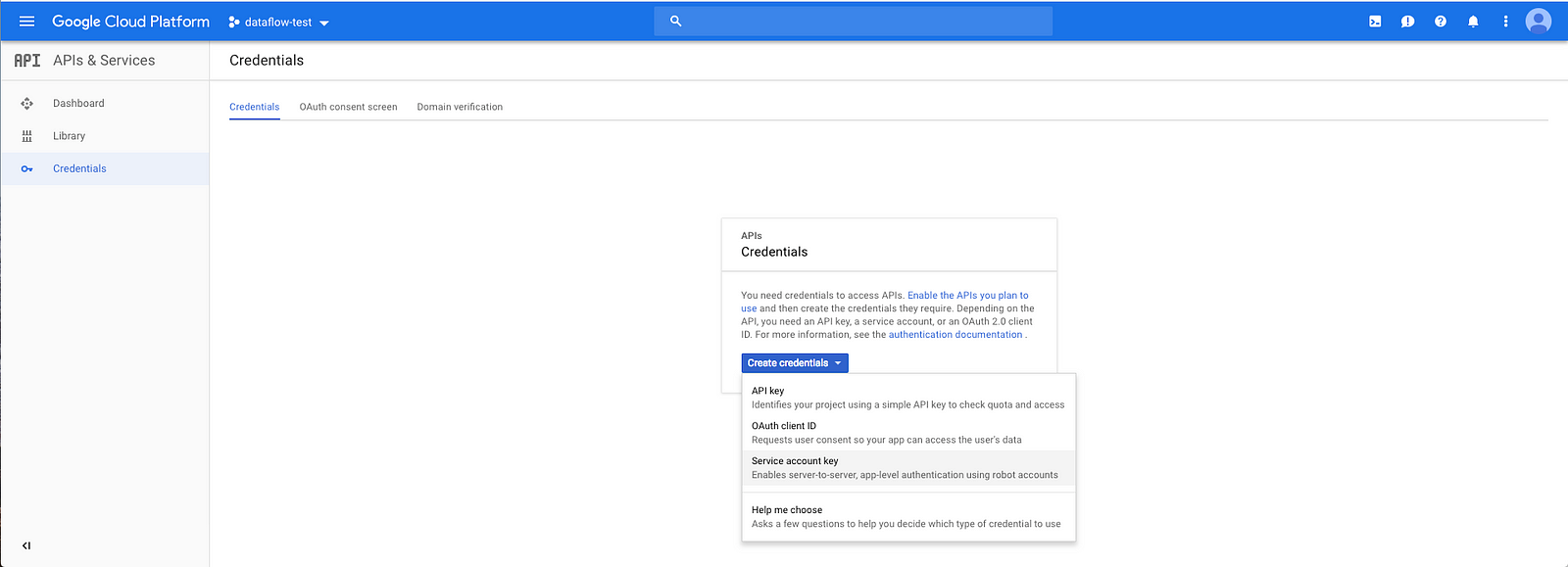

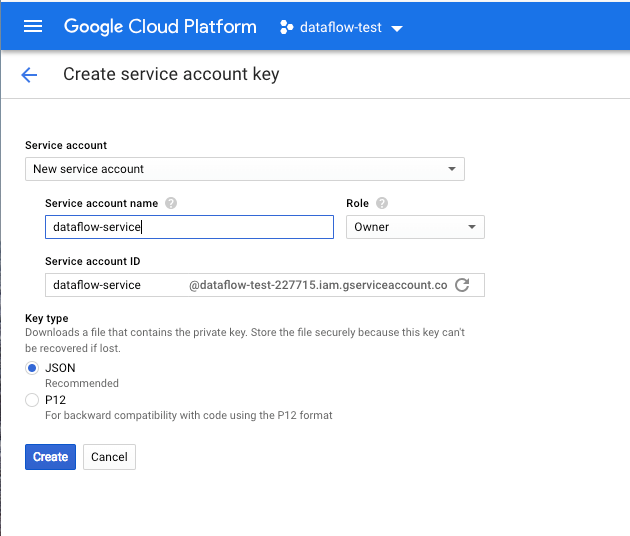

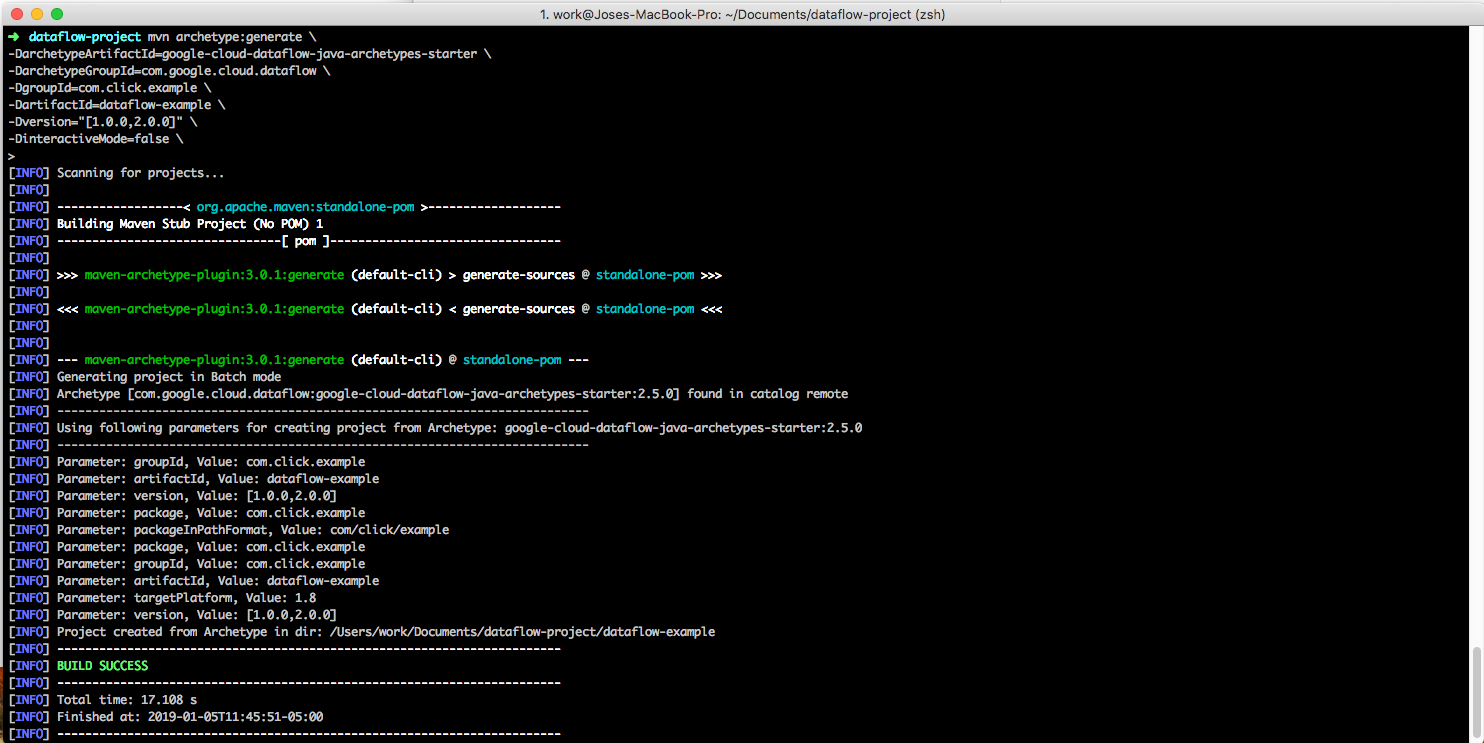

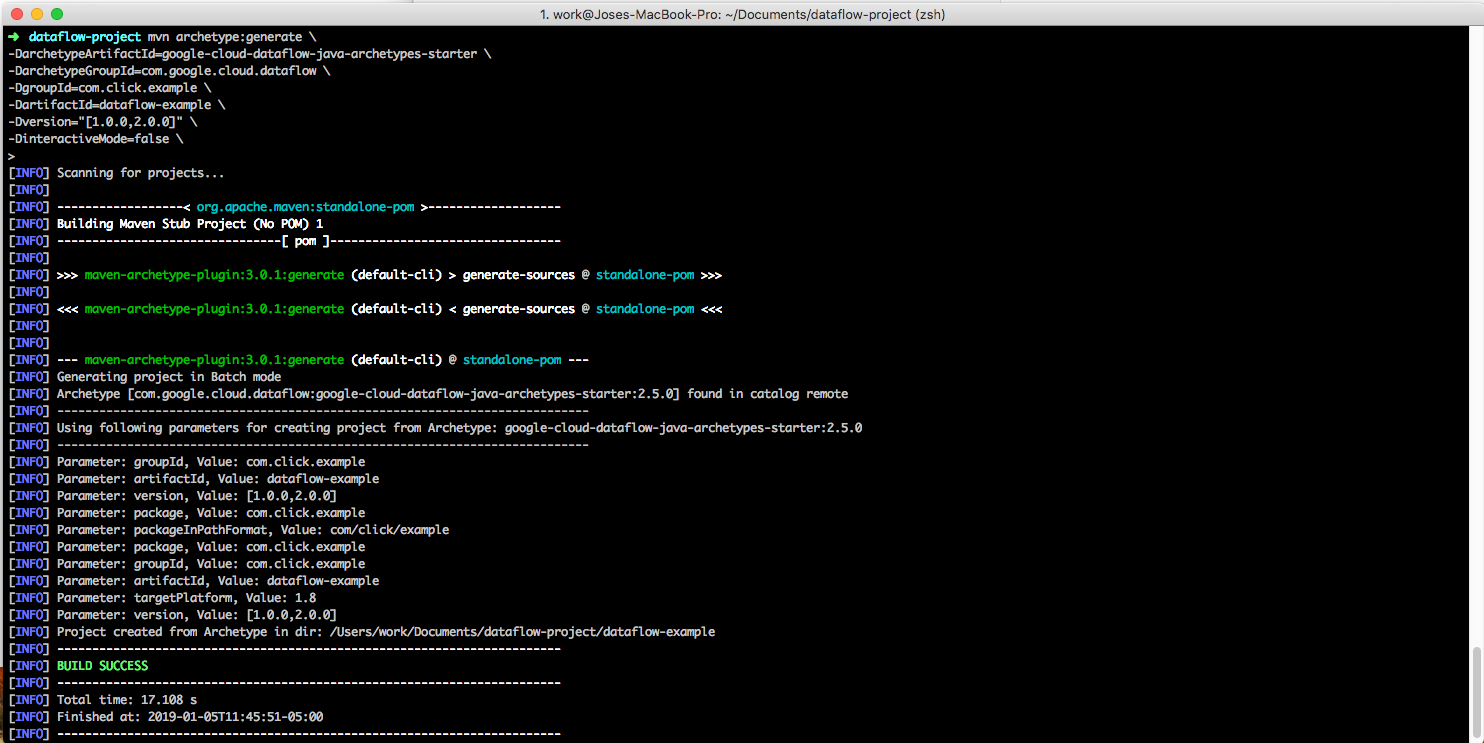

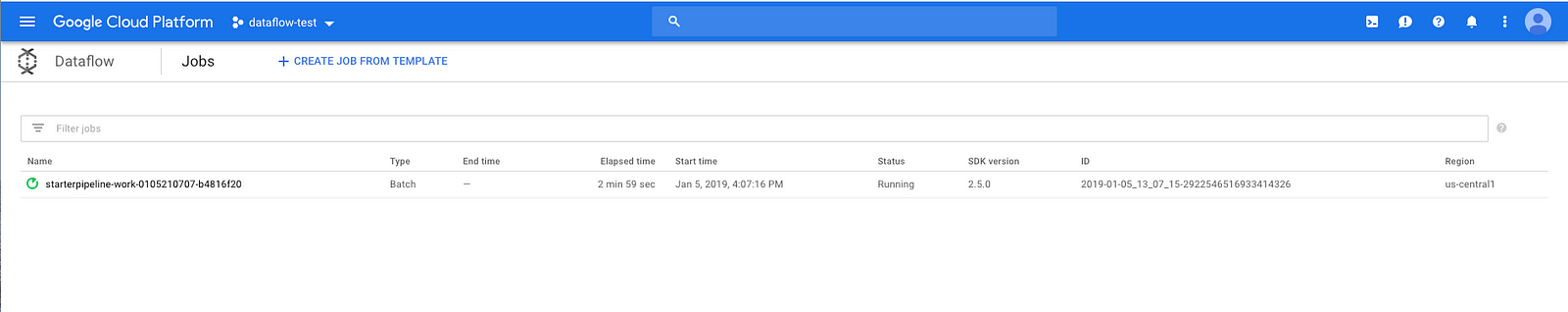

Cloud Dataflow is a managed service for executing a wide variety of data processing patterns. This post explains how to create a simple Maven project with the Apache Beam SDK and run a pipeline on the Google Cloud Dataflow service. One advantage of using Maven is that it manages the Java project's external dependencies, which makes it well suited to automated builds. The example project is very simple: two strings, "Hello" and "World", are the inputs, Dataflow on GCP transforms them to upper case, and the output appears in the console log.

To make this work, you need to enable the required APIs, set up authentication, and set the GOOGLE_APPLICATION_CREDENTIALS environment variable. You also need a Cloud Storage bucket, which will hold the staged JAR files and any temporary files. When creating the service account key:

a. Under the Service account option, select New service account.
b. Enter a name for the service account; in this case, dataflow-service.
c. Set the role to Owner.

If the Google application credentials are not set properly, you may not be able to access the Cloud Storage buckets and will probably see an error like the one shown under Pre-requisites.

The Maven Archetype Plugin lets you create a Maven project from an existing template called an archetype. The command in step 1 generates a new project from google-cloud-dataflow-java-archetypes-starter. It produces an example Java class named StarterPipeline.java, which contains the Apache Beam Java code that defines the pipeline steps.

To compile the Java class and run its main method with arguments, execute the command in step 2. Its pipeline arguments are:

- `--project`: the project ID, in this case dataflow-test-227715.
- `--stagingLocation`: staging folder in a Cloud Storage bucket.
- `--tempLocation`: temp folder in a Cloud Storage bucket.
- `--runner`: set to DataflowRunner to run on GCP.

Go to the Dataflow dashboard, where you should see a new job created and running. You should see the different steps and, when the job finishes, the words 'HELLO' and 'WORLD' in upper case in the log console.
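The Beam code itself is out of scope for this post, but the transformation described above is small enough to mirror in plain Java. The sketch below does not use the Apache Beam SDK and is not the generated StarterPipeline.java; the class `UppercaseSketch` and its methods are hypothetical names chosen for illustration. It only reproduces the input and output: "Hello" and "World" go in, and "HELLO" and "WORLD" are printed.

```java
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

// Hypothetical plain-Java sketch (no Apache Beam) of the element-wise step:
// each input string is upper-cased, then each result is printed.
public class UppercaseSketch {

    // Per-element function: "Hello" -> "HELLO".
    // Locale.ROOT avoids locale-specific casing rules.
    static String toUpper(String input) {
        return input.toUpperCase(Locale.ROOT);
    }

    // Applies the function to each input independently, like a map step.
    static List<String> transform(List<String> inputs) {
        return inputs.stream()
                .map(UppercaseSketch::toUpper)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // The same two inputs used in this post.
        List<String> output = transform(List.of("Hello", "World"));
        // Stand-in for the logging step: print each result on its own line.
        output.forEach(System.out::println);
    }
}
```

On Dataflow, the equivalent step runs per element on the service's workers, and the results show up in the job log rather than on standard output.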

Disclaimer: The purpose of this post is to present the steps to create a data pipeline using Dataflow on GCP; Java code syntax is not discussed and is beyond its scope. I hope to write some specific tutorials on this in the future.

0. Pre-requisites

```shell
export GOOGLE_APPLICATION_CREDENTIALS="my/path/dataflow-test.json"
```
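A missing or mistyped credentials path is easy to overlook. As a rough pre-flight check, assuming you want to verify the variable from Java before launching the pipeline, the hypothetical `CredentialsCheck` class below confirms that `GOOGLE_APPLICATION_CREDENTIALS` is set and points to a readable file. It does not validate the key's contents or the service account's permissions.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Optional;

// Hypothetical pre-flight check for the credentials environment variable.
// It only checks that the file exists and is readable, not that the key is valid.
public class CredentialsCheck {

    static final String ENV_VAR = "GOOGLE_APPLICATION_CREDENTIALS";

    // Returns a description of the problem, or Optional.empty() if the value looks usable.
    static Optional<String> problemWith(String value) {
        if (value == null || value.trim().isEmpty()) {
            return Optional.of(ENV_VAR + " is not set");
        }
        Path path = Paths.get(value);
        if (!Files.isRegularFile(path)) {
            return Optional.of(ENV_VAR + " does not point to an existing file: " + path);
        }
        if (!Files.isReadable(path)) {
            return Optional.of(ENV_VAR + " points to a file that cannot be read: " + path);
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        String value = System.getenv(ENV_VAR);
        Optional<String> problem = problemWith(value);
        if (problem.isPresent()) {
            System.err.println("Credentials check failed: " + problem.get());
            System.exit(1);
        }
        System.out.println("Found credentials file: " + value);
    }
}
```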

```text
An exception occured while executing the Java class. Failed to construct instance from factory method DataflowRunner#fromOptions(interface org.apache.beam.sdk.options.PipelineOptions): InvocationTargetException: DataflowRunner requires gcpTempLocation, but failed to retrieve a value from PipelineOptions: Error constructing default value for gcpTempLocation: tempLocation is not a valid GCS path …
```
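As the message suggests, Dataflow needs a Cloud Storage (`gs://`) location for temporary files. The error can come from missing credentials, as described earlier, but also from a `tempLocation` that is not a `gs://` path at all. The sketch below covers only that second, syntactic case: `GcsPathCheck` is a hypothetical helper, and its rule (a `gs://` prefix followed by a non-empty bucket name) is a simplification, not Beam's own validation.

```java
// Hypothetical, simplified syntactic check for a Cloud Storage location.
// This is NOT Beam's validation: it cannot tell whether the bucket exists
// or whether your credentials can access it.
public class GcsPathCheck {

    static final String SCHEME = "gs://";

    static boolean looksLikeGcsPath(String location) {
        if (location == null || !location.startsWith(SCHEME)) {
            return false;
        }
        String rest = location.substring(SCHEME.length());
        // The bucket name is everything up to the first '/', and it must be non-empty.
        int slash = rest.indexOf('/');
        String bucket = slash < 0 ? rest : rest.substring(0, slash);
        return !bucket.isEmpty();
    }

    public static void main(String[] args) {
        String[] candidates = {
            "gs://example-dataflow-stage/temp/", // valid form
            "example-dataflow-stage/temp/",      // missing gs:// scheme
            "gs:///temp/"                        // empty bucket name
        };
        for (String candidate : candidates) {
            System.out.println(candidate + " -> "
                    + (looksLikeGcsPath(candidate) ? "ok" : "not a GCS path"));
        }
    }
}
```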

1. Use the Java Dataflow archetype

```shell
# Generate a starter Dataflow project in ./dataflow-example
mvn archetype:generate \
  -DarchetypeArtifactId=google-cloud-dataflow-java-archetypes-starter \
  -DarchetypeGroupId=com.google.cloud.dataflow \
  -DgroupId=com.click.example \
  -DartifactId=dataflow-example \
  -Dversion="[1.0.0,2.0.0]" \
  -DinteractiveMode=false
```
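Because the command passes no package name, the generated code should end up in a package matching the groupId, which is why the next step uses `com.click.example.StarterPipeline` as the main class. Assuming that default package and the standard Maven source layout, the hypothetical helper below computes where `StarterPipeline.java` lands inside the new project.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper illustrating the standard Maven source layout:
// <artifactId>/src/main/java/<package as directories>/<ClassName>.java
public class ArchetypeLayout {

    static Path sourceFile(String artifactId, String packageName, String className) {
        Path base = Paths.get(artifactId, "src", "main", "java");
        // Each package segment becomes one directory level.
        for (String segment : packageName.split("\\.")) {
            base = base.resolve(segment);
        }
        return base.resolve(className + ".java");
    }

    public static void main(String[] args) {
        // Values taken from the archetype command; package assumed to equal the groupId.
        System.out.println(sourceFile("dataflow-example", "com.click.example", "StarterPipeline"));
    }
}
```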

2. Run Java main from Maven

```shell
mvn compile exec:java -e \
  -Dexec.mainClass=com.click.example.StarterPipeline \
  -Dexec.args="--project=dataflow-test-227715 \
  --stagingLocation=gs://example-dataflow-stage/staging/ \
  --tempLocation=gs://example-dataflow-stage/temp/ \
  --runner=DataflowRunner"
```
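The flags inside `-Dexec.args` reach the pipeline as the ordinary `String[] args` of `StarterPipeline.main`, where the generated code hands them to Beam's options parsing. The toy parser below is not Beam's `PipelineOptionsFactory`; it is a hypothetical sketch that only handles the `--name=value` form used in this post, to show how each flag maps to a named option.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical toy parser for --name=value flags. Beam's real options parsing
// also supports other forms and validates option names and values; this does not.
public class ArgsDemo {

    static Map<String, String> parse(String[] args) {
        // LinkedHashMap keeps the flags in the order they were given.
        Map<String, String> options = new LinkedHashMap<>();
        for (String arg : args) {
            if (!arg.startsWith("--")) {
                throw new IllegalArgumentException("Expected --name=value but got: " + arg);
            }
            int eq = arg.indexOf('=');
            if (eq < 0) {
                throw new IllegalArgumentException("Missing '=' in: " + arg);
            }
            options.put(arg.substring(2, eq), arg.substring(eq + 1));
        }
        return options;
    }

    public static void main(String[] args) {
        // Roughly what main receives after exec:java splits the exec.args string on whitespace.
        String[] example = {
            "--project=dataflow-test-227715",
            "--stagingLocation=gs://example-dataflow-stage/staging/",
            "--tempLocation=gs://example-dataflow-stage/temp/",
            "--runner=DataflowRunner"
        };
        parse(example).forEach((name, value) -> System.out.println(name + " = " + value));
    }
}
```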

3. Check that the job is created

4. Open the job